How Machine Learning Is Teaching Autonomous Vehicles to Factor in Driver Error

Univ.-Prof. Dr.-Ing. Lutz Eckstein is chair and director of the Institute for Automotive Engineering at RWTH Aachen University in Germany. An expert on the role that machine learning (ML) and artificial intelligence (AI) play in the development of autonomous vehicles (AVs), he talks to Intertraffic about how ML and AI are helping AVs adapt to the entirely alien world of human mistakes.

For over two decades, Intelligent Transport Systems (ITS) experts have warned of the potential “issues” that will arise once the mix of autonomous and human-driven vehicles on our roads reaches a certain proportion.

That scenario is still some way off, of course (and opinions differ on what that proportion actually is), but one topic that is always in play is how intelligent, autonomous vehicles that drive ‘perfectly’ in terms of speed and road positioning will cope with cars driven by people who are easily distracted and prone to misjudgement. We are all familiar with the term “human error”, after all.

One of those experts is Univ.-Prof. Dr.-Ing. Lutz Eckstein, chair and director of the Institute for Automotive Engineering at RWTH Aachen University in Germany. Eckstein and his team are working to maximise the role of machine learning in helping autonomous vehicles understand the behaviour patterns of human drivers. Understanding human errors, and learning how, when and why they occur, will ultimately make driving inherently safer.

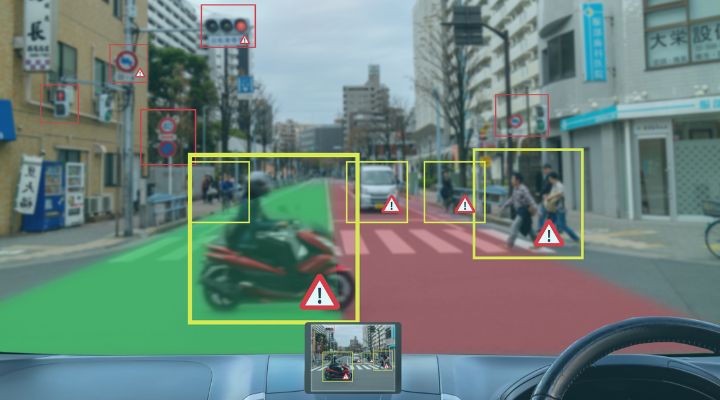

“Look at how information processing works in an autonomous vehicle: first we have perception. The result of perception is that the vehicle knows what the traffic environment looks like in terms of participants, such as other road users, pedestrians, and so on. And then the most important step before taking a tactical decision whether to stop or overtake or how to behave is that the vehicle needs to be capable of predicting how other road users will behave,” Prof Eckstein explains.

The result of perception is that the vehicle knows what the traffic environment looks like in terms of participants, such as other road users and pedestrians

“An example of this would be distinguishing a novice driver from an experienced driver. We have to learn this as human drivers, too, of course. This is where AI plays a significant role. By using data from different sources, we train deep neural networks to predict the behaviour of pedestrians, cyclists, and other road users. And the interesting thing here is that these deep neural networks are quite robust in terms of perspective, so we can train them on drone data, recorded from above, to predict pedestrian behaviour. We can then use these trained networks inside vehicles to help predict that behaviour.

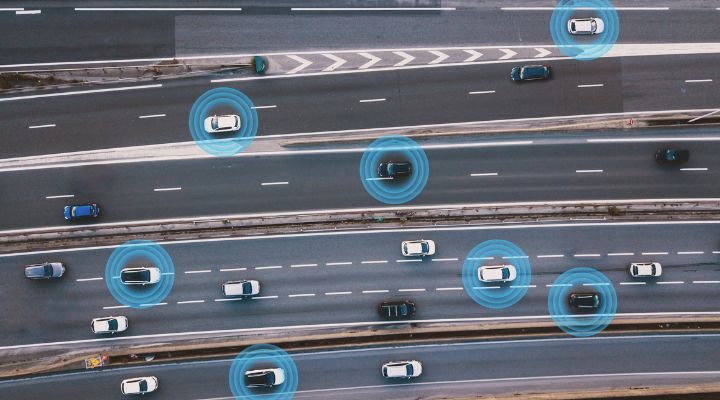

“We also factor in data from roadside units,” he continues, “and, of course, data from inside the vehicles themselves. However, I think it's important to say that you shouldn't be too worried about how these automated vehicles are going to behave.”
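The perception, prediction and tactical-decision chain that Prof Eckstein describes can be illustrated with a short Python sketch. It is a minimal example under stated assumptions, not the institute's software: the class and function names are hypothetical, a simple constant-velocity extrapolation stands in for the trained deep neural networks, only two tactical choices (stop or proceed) are modelled, and the lane width, look-ahead distance and time horizon are invented for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TrackedObject:
    """Perception output: one road user in the ego vehicle's frame
    (x metres ahead, y metres to the left, velocities in m/s)."""
    kind: str  # e.g. "pedestrian", "cyclist", "car"
    x: float
    y: float
    vx: float
    vy: float


def predict_positions(obj: TrackedObject, horizon_s: float,
                      dt: float = 0.5) -> List[Tuple[float, float]]:
    """Prediction stage. ASSUMPTION: constant-velocity extrapolation stands in
    for the trained deep neural networks described in the article."""
    steps = int(round(horizon_s / dt))
    return [(obj.x + obj.vx * k * dt, obj.y + obj.vy * k * dt)
            for k in range(1, steps + 1)]


def tactical_decision(objects: List[TrackedObject], lane_half_width: float = 1.75,
                      look_ahead_m: float = 30.0, horizon_s: float = 3.0) -> str:
    """Decision stage: 'stop' if any road user is predicted to enter the stretch
    of lane ahead of the ego vehicle within the horizon, else 'proceed'.
    ASSUMPTION: hypothetical thresholds; the region is fixed in the ego frame."""
    for obj in objects:
        for px, py in predict_positions(obj, horizon_s):
            if 0.0 <= px <= look_ahead_m and abs(py) <= lane_half_width:
                return "stop"
    return "proceed"


# Usage: a pedestrian 15 m ahead and 4 m to the left, walking towards the lane.
walker = TrackedObject("pedestrian", x=15.0, y=4.0, vx=0.0, vy=-2.0)
print(tactical_decision([walker]))  # stop
```

Keeping prediction in its own function mirrors the point made in the interview: the decision stage only consumes predicted trajectories, so a learned predictor could replace the constant-velocity stand-in without changing the decision logic.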

What are the benefits of autonomous vehicles?

So is Prof Eckstein suggesting that 20+ years of furrowed brows and collective chin-stroking has been for nothing?

“Well, from a technical point of view, AVs have the advantage that they look around: they have a 360-degree view all the time, unlike us humans. AVs don't get tired; they don't drink or use drugs. Their behaviour is consistent and always at quite a high level. So technically, we have very good prerequisites for designing autonomous vehicles as traffic participants that perform well.”

I think it's important to say that we shouldn't be too worried about how automated vehicles are going to behave

To summarise, machine learning and artificial intelligence are teaching autonomous vehicles to expect, or at least not be surprised by, mistakes made by the human beings at the wheel of the other cars sharing the road. In traffic technology terms, this is utopia. However, we can all think of some very high-profile instances where autonomous cars have been involved in accidents, some with tragic consequences.

“We have seen a few cases where this did not work. And here we come to the differences between legal systems. In Europe, we have a regulatory framework which will, in my view, avoid 99.999% of incidents like those we've seen in the United States, because in Europe, and under the regime of the United Nations, you can't simply self-certify. As a manufacturer in the US, you can decide for yourself that your product is safe enough, and NHTSA (National Highway Traffic Safety Administration) only becomes active after something has gone wrong. It’s the other way round in Europe,” he adds. “Here, regulators ask companies to show them how their autonomous vehicle is going to behave safely.”

In Europe we have a regulatory framework which will, in my view, avoid 99.999% of these incident cases, as we've seen in the United States, because in Europe, and under the regime of the United Nations, you can't simply self-certify

How does machine learning teach AVs to understand complex human behaviours?

It’s a long-held belief that drivers only really ‘learn’ to drive after passing their test: it is the experience of single-handedly piloting a machine around a road network, alongside other people doing the same thing, that teaches you the tricks you need to do this successfully, with as few incidents as possible, for the next 70 years. After a few years of driving, you develop a ‘sixth sense’: a feeling, a premonition if you will, that a car is going to pull out in front of you, which prepares you for an impending accident and gives you enough time to do your utmost to avoid it. How does machine learning teach vehicles to understand this when humans struggle to understand it themselves?

“This is exactly what we work on here,” Prof Eckstein responds immediately. “Although in Germany we call that the seventh sense. What we do here in our research is design algorithms that give automated vehicles the same capabilities as experienced humans. We humans think afterwards about what happened at an intersection and then reflect on a better behaviour, and that's exactly what automated vehicles need to do. They have to make a tactical decision from a repertoire of possible behaviours.”

What we do here in our research is design algorithms that give automated vehicles the same capabilities as experienced humans

The human/machine interface

This is where we humans, interacting with machines, have to hope that the machines have made the right choice. And if they haven’t, we have to hope even more keenly that their incorrect decision doesn’t dovetail with an incorrect decision of our own and lead to chaos.

Says Prof Eckstein: “This is where the car evaluates whether or not the tactical decision it made was the best one it could have made, but the good thing here, and it's the real advantage of autonomous vehicles, is that we work on a concept where we share this experience. As a human approaching an intersection, you might conclude that because it is midday and there is a school nearby, children may well be coming out of the building ahead. The speed limit might be 50 km/h, but you know you should only drive at 30 because this situation arises there regularly.”

This sounds very much like autonomous vehicles learning valuable life lessons just as their human counterparts do, unless that oversimplifies a complex subject. Prof Eckstein suggests that it doesn’t.

“What we are trying to do is add an additional layer of ‘collective experience’ to an HD map. If the autonomous vehicle is approaching the intersection I just described, is there any specific information and experience relevant to this manoeuvre? If there is, can we make use of it in the practical decision-making process and behave as if this autonomous vehicle had crossed this intersection 1,000 times? This is a concept that we have started to work on in the project. It's still at the research stage, but we really think it has great potential.”

What we are trying to do is add an additional layer of ‘collective experience’ to an HD map. If the autonomous vehicle is approaching an intersection, is there any specific information and experience for this action?
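A ‘collective experience’ layer of this kind could, in principle, be as simple as pooled fleet reports keyed by map element and time of day. The Python sketch below is a minimal illustration, assuming that vehicles report the speed they judged appropriate after passing a map element and that the layer returns the median once enough reports exist. The class name, method names, the hour-of-day keying and the three-report threshold are all hypothetical; the article does not describe the project's actual data model.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, List, Optional, Tuple


class CollectiveExperienceLayer:
    """HYPOTHETICAL HD-map layer: pools the speeds that many vehicles judged
    appropriate at a map element (e.g. an intersection) for each hour of the day."""

    def __init__(self, min_reports: int = 3) -> None:
        # Only trust the layer once several independent reports exist.
        self.min_reports = min_reports
        self._reports: Dict[Tuple[str, int], List[float]] = defaultdict(list)

    def report(self, element_id: str, hour: int, judged_speed_kmh: float) -> None:
        """A vehicle uploads the speed it judged appropriate after passing."""
        self._reports[(element_id, hour)].append(judged_speed_kmh)

    def advised_speed(self, element_id: str, hour: int) -> Optional[float]:
        """Median of the pooled reports, or None if there is too little experience."""
        reports = self._reports.get((element_id, hour), [])
        if len(reports) < self.min_reports:
            return None
        return median(reports)


def target_speed(layer: CollectiveExperienceLayer, element_id: str, hour: int,
                 speed_limit_kmh: float) -> float:
    """Never exceed the legal limit; slow down further if shared experience advises it."""
    advice = layer.advised_speed(element_id, hour)
    return speed_limit_kmh if advice is None else min(speed_limit_kmh, advice)


# Usage: the school intersection from the example, 50 km/h limit.
layer = CollectiveExperienceLayer()
for speed in (30.0, 28.0, 32.0):
    layer.report("school_junction", 12, speed)
print(target_speed(layer, "school_junction", 12, 50.0))  # 30.0 at midday
print(target_speed(layer, "school_junction", 20, 50.0))  # 50.0 with no evening reports
```

The median is used so that a single outlier report cannot set the advice, and the legal limit always acts as an upper bound, so shared experience can only make the vehicle more cautious.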

AVs have demonstrably proven their value

AVs have certainly come a long way from the various demos of the 1990s, in which driverless cars essentially followed trails of magnets embedded in the surface of unfinished motorways in San Diego (Demo 97) and Leiden (Demo 98), 40 km south-west of Amsterdam. In 2024, we are happily discussing the idea of cars learning from human mistakes and, essentially, having to be open-minded to all possibilities.

“With any human driver, as you know, there are clearly limits. This is why we say it ultimately makes sense to compare human performance with the tactical and reactive performance of automated vehicles. The big question you are really asking is ‘how well should automated vehicles drive?’ This is what we have been asking in our research, but car companies have now started to work on this as well. We are trying to model human behaviour, and human driver behaviour in particular, in order to have a reference,” explains Eckstein.

“The goal is basically to have a model that depicts the tactical and reactive performance of many thousands of drivers, from high-performance drivers on the right side of the graph to those on the left who tend to drive slowly, like my parents, who are still driving in their 80s. Once you have this ‘distribution’ of performance, you can use these driver models to show that the automated systems are driving more safely than 95 per cent of human drivers.”

We need to have sufficient evidence in order to show that an automated vehicle system has capabilities that are better than 95% of all human drivers we know

“What we are all trying to avoid, what we are working towards,” concludes Prof Eckstein, “is a situation where someone has been struck and killed by an automated vehicle and we are in court before a jury. What we would have to answer is ‘would a human driver have been able to avoid the crash?’, and then we need sufficient evidence to show that this system has capabilities better than those of 95% of all the drivers we know, and that in all probability this unfortunate person would have been killed by a human driver as well. It would not have mattered whether the car was being driven by an automated system or by a person: the accident arose from such a difficult constellation of circumstances that it was unavoidable.”
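The 95 per cent benchmark amounts to a percentile comparison against a distribution of driver models. The Python sketch below assumes, purely for illustration, that each modelled human driver and the automated system are scored by the share of a common set of test scenarios in which they avoid a crash, and it checks whether the automated system outperforms at least 95% of the driver models. The scoring rule, the function names and the synthetic scores are assumptions, not the institute's method or data.

```python
from typing import Sequence


def avoidance_score(outcomes: Sequence[bool]) -> float:
    """Share of test scenarios in which a driver (human model or AV) avoided a crash."""
    if not outcomes:
        raise ValueError("need at least one scenario outcome")
    return sum(outcomes) / len(outcomes)


def share_outperformed(av_score: float, human_scores: Sequence[float]) -> float:
    """Fraction of modelled human drivers whose score is strictly below the AV's."""
    if not human_scores:
        raise ValueError("need at least one human driver model")
    return sum(1 for s in human_scores if s < av_score) / len(human_scores)


def better_than_share(av_score: float, human_scores: Sequence[float],
                      share: float = 0.95) -> bool:
    """True if the AV outperforms at least `share` of the modelled human drivers."""
    return share_outperformed(av_score, human_scores) >= share


# Usage with a SYNTHETIC, evenly spread population of 100 driver-model scores
# (0.00 to 0.99); these are placeholder values, not measured data.
humans = [i / 100 for i in range(100)]
print(better_than_share(0.975, humans))  # True: 98 of 100 scores are lower
print(better_than_share(0.905, humans))  # False: 91 of 100 scores are lower
```

A strict "below" comparison is used so that a tie with a human driver model does not count as outperforming it, which keeps the check on the conservative side.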